Secure memory

Remco Bloemen, 2014-05-28, last updated 2014-06-09

How can you make it harder, in software, for an attacker to steal sensitive information from a computer's memory?

Sensitive information

(Section is work in progress)

What kinds of sensitive information can be stored in memory?

Passphrases, private keys, session keys. Crypto algorithm state. Decrypted content.

Keys and passphrases are small, on the order of 32 bytes. Crypto algorithm states can be a lot larger.

How you get those sensitive passphrases securely into the computer’s memory is beyond the scope of this article.

TODO

Stealing sensitive information

Is it ‘stealing’ when the attacker merely copies a key? Well, the attacker gained something of value to him, and the original owner is left with a key that is now worthless. Attacker gains, owner loses, so you could argue that it is indeed stealing.

Suppose you have sensitive information, say credit card records in a database. I’ll assume you did not store it unencrypted on the disk, but at some point the information needs to be stored unencrypted in the computer’s working memory (RAM). In what ways can an attacker steal this information while it is in working memory?

Social engineering: Probably the easiest way to get sensitive information is to simply ask for it, possibly under a false pretext. There are many different tricks that can be employed, from phishing by mail to carefully crafted, personalized attacks. This is an important subject, but it belongs to the equally important realm of user interaction design, whereas this article focuses on the low-level software engineering aspects of securing sensitive information.

Software bugs: There are many ways in which an application can contain bugs that allow an attacker to read out its sensitive information. I will not try to list all the different kinds of bugs here; they range from bad implementations that store or transmit the sensitive information unencrypted, to buffer over-read bugs such as Heartbleed, to more subtle things such as leaking information from a secured heap to the less secure stack due to register spilling. There are techniques that can drastically reduce the impact of bugs, many of which are explained in the next section of this article.

Operating system bugs: Modern processors have memory protection that prevents different processes from accessing each other's memory. Setting up those access rights requires privileged instructions that are only available to the operating system and its drivers. Poor-quality drivers in particular may contain bugs that allow one process to gain access to another process' memory.

Timing attacks: The amount of time a computer spends on something can reveal a bit about what it is doing. In ‘Cache Missing for Fun and Profit’ the author demonstrates how an attacking process can deduce how a target process accesses its memory and, consequently, what its RSA private keys are. Timing attacks have also been applied to AES (paper) and performed remotely by measuring how long a server takes to respond (paper).

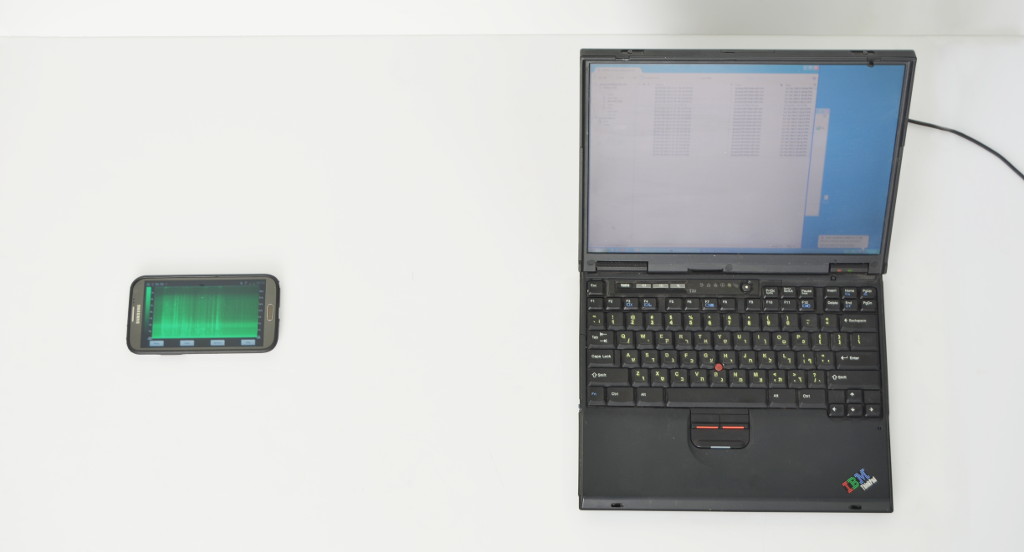

EM/power/acoustic attacks: In a wonderful study by Daniel Genkin et al. (source), the researchers explored different methods of extracting sensitive information by listening to the electromagnetic and acoustic noise a computer emits. They set up a normal laptop running encryption software and made it decrypt specially selected data. The 4096-bit private key was then stolen using a smartphone microphone at 30 cm, a sensitive lab microphone at 4 m, through high-level Tempest shielding, and by measuring the electrical noise at the far end of a 10 m Ethernet cable. Together with timing attacks, these are known as side-channel attacks.

Hibernate files: When the computer goes into deep sleep (hibernation), it turns off the power to the memory. Before it can do this, it needs to store the memory contents somewhere else, namely on the hard disk. When the computer wakes up, it restores the memory contents and continues where it left off. This is a common and useful trick in battery-operated devices, but in the process all the sensitive information is written to the hard disk, where it can be read out through physical access or special software.

Swapping: Much like with hibernation, when the operating system wants to free up memory, it moves some of the memory contents to disk, to be reloaded when they are needed again. Modern operating systems do this even when memory is not entirely full. The consequences are the same as with hibernation.

Debuggers: The system calls ptrace (on Unix-like systems) and ReadProcessMemory (on Windows) allow one process to inspect another process' memory. These calls are meant for debugging and should require special privileges over the process being observed. But bad security practices, such as working under an administrator account, can result in all processes having this debug privilege.

Core dumps: Most modern operating systems produce a crash report when an application fails. The report usually includes the point of failure, the state of the processor, a stack trace and sometimes a dump of the memory. All of these can contain sensitive information, so crash reports should be considered as confidential as the content of the application. Automatically uploading crash reports to the application developers can hand sensitive information to a third party and, if the upload is not sufficiently secured, to anyone eavesdropping.

Virtualization: A hypervisor is a piece of software that creates virtual machines. The hypervisor has full access to the virtual machines' memory, unbounded by the guest's memory protection. This access is used, for example, to save a machine's state to disk, much like a hibernate file and with the same implications. Even if the virtualization software itself is not malicious, it can contain exploitable bugs. A notable example is the Computrace anti-theft service, which comes factory installed on “most notebooks sold since 2005”. It is embedded in the BIOS, cannot be removed, and has a design weakness that can allow an attacker to install a rootkit.

Rootkit: The rootkits considered here are malicious hypervisors that use the powerful position of a hypervisor to attack the virtualized system. A rootkit like Blue Pill or Stoned will, when executed, install itself as a hypervisor and move the running system into a virtual machine. From then on, the system is unaware that it has been moved to a virtual machine or that the rootkit is running in the background. Once installed, the rootkit has full control: it can connect to the internet, steal disk encryption keys and log keystrokes.

DMA attacks: Direct memory access (DMA) allows computer hardware to access memory directly, bypassing the processor. This frees the processor to do other work while big memory transfers happen in the background. It is used by internal devices such as hard drives, network cards and graphics cards, and by external ports such as FireWire, ExpressCard and Thunderbolt. eSATA and USB make use of DMA too, but restrict what a device can do to a safe subset. Once a device has unrestricted DMA access, it can bypass all of the memory protections built into the operating system and read out the entire memory. Inception is a free tool that extracts memory contents over FireWire and searches them for disk encryption keys.

Cold boot attacks: In a cold boot attack the attacker quickly restarts the machine into a state where he can read out the memory contents. This works because the contents of the memory are not cleared during a restart and will retain sensitive information for seconds while the power is off. The Center for Information Technology Policy provides free tools to boot into a special operating system and find RSA and AES keys. Frost is a similar tool for Android devices.

Bus sniffing: Although this is getting a bit far-fetched, it is possible to physically open a running computer, attach wires to the motherboard at strategic places and simply eavesdrop on the communication to and from the memory. The bus clock of DDR3 RAM runs at up to about one GHz, which is within the range of good logic analyzers.

Protecting sensitive information

(Section is work in progress)

First, two general tricks that will come in handy later, when using some of the more advanced techniques. Both tricks assume it is possible to generate keys securely.

Reducing the size of the information: If you want to store several megabytes of sensitive information securely, the size will rule out some of the tricks below, yet you may still want to benefit from their added security. To do this, generate a new key and authenticated-encrypt the information with it. The encrypted information can be stored in non-secure memory, or even on disk; only the tiny encryption key needs to be stored in a secure location. The downside is that you need to decrypt and re-encrypt the information every time you read or modify it. You also still need a secure place for the decrypted information while you access it, but only for much shorter periods and possibly for just a subset of it.

Combining two locations: Suppose you have two different locations where you can store something relatively securely, and you would like to combine the strengths of both. This is not trivial. If you simply store half of the sensitive information in one location and half in the other, you make it harder to obtain all of it (an attacker must compromise both locations) but easier to obtain some of it (compromising either location suffices). To really combine the strengths of the two locations, generate a key, authenticated-encrypt the sensitive information with that key, and store the key in one location and the encrypted data in the other. An attacker now needs both pieces to recover the original data. A common implementation uses an XOR-based one-time pad instead. This provides no integrity guarantee, so an attacker with access to one location can still change the information, even though he cannot read it.
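The XOR-based variant mentioned above can be sketched as follows. The sketch assumes Linux, where getrandom(2) requires glibc 2.25 or later, and the names split and combine are hypothetical. It also demonstrates the missing integrity guarantee: flipping a bit in one share silently flips the same bit in the recovered secret.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <sys/random.h>
#include <sys/types.h>

// Size of the secret in bytes (32 bytes, a typical key size).
constexpr std::size_t kSecretSize = 32;
using Block = std::array<std::uint8_t, kSecretSize>;

// Fill buf with bytes from the kernel's CSPRNG via Linux getrandom(2).
void fill_random(std::uint8_t* buf, std::size_t len) {
    while (len > 0) {
        ssize_t n = getrandom(buf, len, 0);
        if (n < 0) throw std::runtime_error("getrandom failed");
        buf += n;
        len -= static_cast<std::size_t>(n);
    }
}

// Split the secret into a random pad (for location A) and secret XOR pad
// (for location B). Each share on its own is uniformly random.
void split(const Block& secret, Block& share_a, Block& share_b) {
    fill_random(share_a.data(), share_a.size());
    for (std::size_t i = 0; i < kSecretSize; ++i)
        share_b[i] = static_cast<std::uint8_t>(secret[i] ^ share_a[i]);
}

// Recombine the shares: XOR-ing with the pad a second time cancels it out.
Block combine(const Block& share_a, const Block& share_b) {
    Block out{};
    for (std::size_t i = 0; i < kSecretSize; ++i)
        out[i] = static_cast<std::uint8_t>(share_a[i] ^ share_b[i]);
    return out;
}
```

In the authenticated-encryption variant, share B would instead be a ciphertext with an authentication tag, so this kind of tampering would be detected during decryption.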

API design: First and foremost, a good API design with proper abstractions can make a world of difference. Create one class that is responsible for all the logistics of sensitive information; all of the alloc/free/zero logic should be contained there. Many of the mitigations below can then be implemented in the constructors and destructors of this class, and they will be effective everywhere. Casting between secure and non-secure memory should be explicit, or perhaps even disallowed.

Mitigates: Software bugs, and makes all the other mitigations more effective.

Clear the memory: Memory should be cleared as soon as possible, and definitely before it is released (see CERT MEM03-C). Keeping the sensitive information in memory for the minimal amount of time reduces the exposure to many attacks, and clearing it before release prevents accidental leakage when the memory is reused. Note that a simple call to std::fill is not sufficient: the compiler may optimize it away, especially when the memory is freed immediately afterwards. To prevent this, the pointer can be declared volatile or, under Windows, SecureZeroMemory can be used (see CERT MSC06-C).

Mitigates: Software bugs, and reduces chances of other attacks.
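Here is a minimal sketch of the volatile-pointer approach described in CERT MSC06-C. The function name secure_zero is hypothetical. Where a platform provides a dedicated function, it is the better choice: SecureZeroMemory on Windows, or explicit_bzero on glibc 2.25 and later, OpenBSD and FreeBSD.

```cpp
#include <cstddef>

// Overwrite n bytes at p with zeros through a volatile pointer. Each store
// through a volatile lvalue counts as observable behavior, so the compiler may
// not drop these writes even if the memory is freed right afterwards.
void secure_zero(void* p, std::size_t n) {
    volatile unsigned char* vp = static_cast<volatile unsigned char*>(p);
    while (n--) *vp++ = 0;
}
```

This only clears the buffer it is given. It does nothing about copies made earlier, for example by a std::vector that reallocated its storage while growing.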

No sensitive indexing: Memory access patterns that depend on sensitive information should be avoided, since they open opportunities for side-channel attacks. Lookup tables, for example, can be redesigned to always read every entry and select the wanted one by multiplying each entry by zero or one. Be careful about compiler optimizations, which may reintroduce the dependency, and about the performance impact. If access patterns cannot be made secure, the risk can be reduced a bit by prefetching all of the sensitive information into the caches before use.

Mitigates: Timing attacks, EM/power/acoustic attacks.
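The following sketch shows such a lookup. The hypothetical ct_lookup reads every entry and keeps only the wanted one. Instead of multiplying by one or zero, it ANDs each entry with a mask that is all ones or all zeros, which has the same effect. Whether the compiled code is actually branch-free depends on the compiler, so check the generated assembly.

```cpp
#include <climits>
#include <cstddef>
#include <cstdint>

// Hypothetical constant-time lookup: returns table[secret_index], but reads
// every entry so the memory access pattern does not depend on the index.
std::uint32_t ct_lookup(const std::uint32_t* table, std::size_t size,
                        std::size_t secret_index) {
    std::uint32_t result = 0;
    for (std::size_t i = 0; i < size; ++i) {
        const std::size_t diff = i ^ secret_index;  // zero only at the wanted entry
        // The top bit of (diff | -diff) is 1 exactly when diff != 0.
        const std::uint32_t nonzero = static_cast<std::uint32_t>(
            (diff | (0 - diff)) >> (sizeof(std::size_t) * CHAR_BIT - 1));
        const std::uint32_t mask = nonzero - 1;  // all ones at the wanted entry, else 0
        result |= table[i] & mask;
    }
    return result;
}
```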

No sensitive branches: Do not let control flow depend on sensitive information. A good example is memcmp: it returns at the first difference it finds between two byte arrays, so its timing reveals the length of the common prefix. Just like with indexing, you have to be careful about compiler optimizations when working around sensitive branches.

Mitigates: Timing attacks, EM/power/acoustic attacks.
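The following sketch shows a comparison without an early exit. The name ct_equal is hypothetical. Libraries ship hardened versions of the same idea, for example CRYPTO_memcmp in OpenSSL and sodium_memcmp in libsodium.

```cpp
#include <cstddef>

// Compare two equal-length byte arrays. The loop always runs over all n bytes
// and only accumulates differences, so its duration does not depend on where
// the arrays differ. Returns true when they are equal.
bool ct_equal(const unsigned char* a, const unsigned char* b, std::size_t n) {
    unsigned char acc = 0;
    for (std::size_t i = 0; i < n; ++i)
        acc |= static_cast<unsigned char>(a[i] ^ b[i]);
    return acc == 0;  // only the final equal/not-equal result is revealed
}
```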

Canaries: Out-of-bounds writes can sometimes be detected using canaries, which are known values placed directly before and after the buffer. If the application overwrites them with different values, this is detected the next time the canaries are checked. The canary values should be hard for an attacker to predict. Canaries are very useful for finding and mitigating buffer overflow bugs.

Mitigates: Software bugs.
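Here is a minimal sketch that assumes Linux for getrandom(2). The hypothetical CanaryBuffer class surrounds the data with a random 64-bit canary and can check both copies on demand, for example just before the buffer is freed.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <cstring>
#include <new>
#include <stdexcept>
#include <sys/random.h>
#include <sys/types.h>

// Hypothetical buffer with the layout [canary][data ...][canary].
// (Clearing the data before free, as recommended above, is omitted for brevity.)
class CanaryBuffer {
public:
    explicit CanaryBuffer(std::size_t size) : size_(size) {
        raw_ = static_cast<unsigned char*>(std::malloc(size + 2 * sizeof canary_));
        if (raw_ == nullptr) throw std::bad_alloc();
        // A fresh random canary per buffer is hard for an attacker to predict.
        if (getrandom(&canary_, sizeof canary_, 0) != static_cast<ssize_t>(sizeof canary_)) {
            std::free(raw_);
            throw std::runtime_error("getrandom failed");
        }
        std::memcpy(raw_, &canary_, sizeof canary_);                          // front canary
        std::memcpy(raw_ + sizeof canary_ + size_, &canary_, sizeof canary_);  // rear canary
    }
    ~CanaryBuffer() { std::free(raw_); }
    CanaryBuffer(const CanaryBuffer&) = delete;
    CanaryBuffer& operator=(const CanaryBuffer&) = delete;

    unsigned char* data() { return raw_ + sizeof canary_; }
    std::size_t size() const { return size_; }

    // False if either canary changed, i.e. something wrote outside the data.
    bool intact() const {
        std::uint64_t front, back;
        std::memcpy(&front, raw_, sizeof front);
        std::memcpy(&back, raw_ + sizeof canary_ + size_, sizeof back);
        return front == canary_ && back == canary_;
    }

private:
    std::size_t size_;
    unsigned char* raw_ = nullptr;
    std::uint64_t canary_ = 0;
};
```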

TODO: Explain pages. 64k size.

Guard pages: Guard pages are like super canaries. They are placed before and after the data and detect unauthorized or accidental accesses beyond the buffer. Unlike canaries, guard pages can also detect reads, trigger immediately on the offending access, and do not rely on unguessable values. However, guard pages must be page aligned. Because the data is rarely an exact multiple of the page size, they cannot always be placed directly against both ends of the data, which leaves a gap in the protection. Guard pages are implemented at a very low level in the operating system's virtual memory manager, and can therefore catch a wider range of bugs.

Mitigates: Software bugs, operating system bugs.
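Here is a sketch for POSIX systems that support anonymous mappings; it has only been thought through for Linux. The hypothetical guarded_alloc maps the data plus one extra page on each side, then uses mprotect to revoke all access to the extra pages. Any access to a guard page raises SIGSEGV.

```cpp
#include <cstddef>
#include <sys/mman.h>
#include <unistd.h>

// Layout: [guard page][data pages][guard page].
struct GuardedRegion {
    unsigned char* base = nullptr;  // start of the whole mapping
    std::size_t total = 0;          // length of the whole mapping
    unsigned char* data = nullptr;  // first usable byte
    std::size_t data_len = 0;       // usable bytes (whole pages)
};

bool guarded_alloc(std::size_t size, GuardedRegion& r) {
    const std::size_t page = static_cast<std::size_t>(sysconf(_SC_PAGESIZE));
    const std::size_t data_len = (size + page - 1) / page * page;  // round up to whole pages
    const std::size_t total = data_len + 2 * page;
    void* p = mmap(nullptr, total, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED) return false;
    unsigned char* base = static_cast<unsigned char*>(p);
    // Revoke all access to the first and the last page.
    if (mprotect(base, page, PROT_NONE) != 0 ||
        mprotect(base + page + data_len, page, PROT_NONE) != 0) {
        munmap(base, total);
        return false;
    }
    r = GuardedRegion{base, total, base + page, data_len};
    return true;
}

void guarded_free(GuardedRegion& r) {
    if (r.base != nullptr) munmap(r.base, r.total);
    r = GuardedRegion{};
}
```

This layout shows the gap mentioned above. When size is not a multiple of the page size, bytes between the end of the caller's data and the rear guard page stay accessible. If the data is placed against the end of its last page instead, the rear gap closes but the same gap opens at the front.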

Page access: Processes can get fine-grained control over the access rights to particular sections of memory using mprotect in POSIX and VirtualProtect in Windows. Pages can be marked as inaccessible, read-only or read-write (amongst others). For example, guard pages can be created by marking a page as inaccessible. In addition, on Linux, madvise can be called with the options MADV_DONTDUMP and MADV_DONTFORK to keep specific pages out of core dumps and child processes, respectively.

Mitigates: Software bugs.
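The following Linux-only sketch uses hypothetical helper names. It allocates whole pages for a secret, applies the two madvise options, and toggles the pages between read-only and read-write with mprotect. With this setup, a stray write outside a deliberate update faults instead of silently corrupting the secret.

```cpp
#include <cstddef>
#include <sys/mman.h>
#include <unistd.h>

// Allocate pages for a secret and ask the kernel to leave them out of core
// dumps (MADV_DONTDUMP, Linux 3.4+) and out of children created by fork()
// (MADV_DONTFORK). Returns nullptr on failure.
void* alloc_private_pages(std::size_t len) {
    void* p = mmap(nullptr, len, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED) return nullptr;
    if (madvise(p, len, MADV_DONTDUMP) != 0 || madvise(p, len, MADV_DONTFORK) != 0) {
        munmap(p, len);
        return nullptr;
    }
    return p;
}

// Switch the pages between read-only (the normal state) and read-write
// (only while the secret is being modified).
bool set_writable(void* p, std::size_t len, bool writable) {
    return mprotect(p, len, writable ? (PROT_READ | PROT_WRITE) : PROT_READ) == 0;
}
```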

Locked pages: Pages can be prevented from being swapped out using mlock in POSIX and VirtualLock in Windows. See also http://blogs.msdn.com/b/oldnewthing/archive/2007/11/06/5924058.aspx

Mitigates: Swapping and sometimes hibernate files.
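Here is a minimal POSIX sketch with hypothetical helper names. mlock can fail when the process exceeds its RLIMIT_MEMLOCK limit, so the result must be checked. The buffer should also be cleared before munlock, because the pages may be swapped out again once they are unlocked.

```cpp
#include <cstddef>
#include <sys/mman.h>

// Lock the pages holding a secret into RAM. Returns false if the lock was
// refused (for example ENOMEM/EPERM under a tight RLIMIT_MEMLOCK).
bool lock_secret(void* p, std::size_t len) {
    return mlock(p, len) == 0;
}

// Clear the secret first, then unlock: once unlocked, the pages may be
// swapped out again and would otherwise carry the secret to disk.
void unlock_secret(void* p, std::size_t len) {
    volatile unsigned char* vp = static_cast<volatile unsigned char*>(p);
    for (std::size_t i = 0; i < len; ++i) vp[i] = 0;
    munlock(p, len);
}
```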

Operating system encryption: Windows provides two functions, CryptProtectMemory and CryptUnprotectMemory, that encrypt and decrypt a block of memory using what appears to be a process-specific encryption key held by the operating system. The functions are specifically designed for information that should not be written to disk in plaintext when hibernating or swapping. The closest Linux equivalent is the kernel key retention service, which keeps the secret in kernel memory rather than encrypting it in place. It is used through add_key, keyctl_update, keyctl_read, keyctl_clear and keyctl_invalidate. See also https://stackoverflow.com/questions/15555691/confusion-on-the-usage-of-add-key-linux-key-management-interface

Mitigates: Software bugs, swapping, hibernate files, core dumps and possibly more depending on the operating system implementation.
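The following Linux-only sketch uses the key retention service through the raw add_key(2) and keyctl(2) system calls, so it needs no extra library. libkeyutils provides the friendlier add_key and keyctl_read wrappers named above. The helper names are hypothetical. Some container runtimes block these system calls with seccomp, so callers must handle failure.

```cpp
#include <cstddef>
#include <linux/keyctl.h>
#include <sys/syscall.h>
#include <unistd.h>

// Copy the payload into kernel memory as a "user" key attached to this
// process's keyring. Returns the key serial number, or -1 on error.
long store_secret(const char* description, const void* payload, std::size_t len) {
    return syscall(SYS_add_key, "user", description, payload, len,
                   KEY_SPEC_PROCESS_KEYRING);
}

// Copy the payload back into buf. Returns the payload length, or -1 on error.
long read_secret(long key, void* buf, std::size_t buflen) {
    return syscall(SYS_keyctl, KEYCTL_READ, key, buf, buflen);
}

// Invalidate the key so it can no longer be found or read (Linux 3.5+).
long invalidate_secret(long key) {
    return syscall(SYS_keyctl, KEYCTL_INVALIDATE, key);
}
```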

Disable debuggers: Under Linux, a process can disable core dumps and debugging by clearing its dumpable flag with the prctl call (option PR_SET_DUMPABLE). A similar thing can be done on Mac OS and iOS using the PT_DENY_ATTACH option to ptrace. Under Windows, a process can detect the presence of a debugger using IsDebuggerPresent, and debuggers can be prevented from attaching by creating the process using CreateProcess with the appropriate access rights.

Mitigates: Debuggers.
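Here is a Linux sketch of the prctl call, where disable_dumpable is a hypothetical name. A process with CAP_SYS_PTRACE, such as one running as root, can still attach.

```cpp
#include <sys/prctl.h>

// Clear this process's "dumpable" flag. Afterwards, unprivileged processes
// running as the same user can no longer attach with ptrace, and the kernel
// will not write a core dump for this process.
bool disable_dumpable() {
    return prctl(PR_SET_DUMPABLE, 0, 0, 0, 0) == 0;
}
```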

Disable core dumps: Under POSIX operating systems, core dumps can be disabled by setting RLIMIT_CORE to zero with a call to setrlimit. There is no direct Windows equivalent of this call, as Windows does not produce POSIX-style core dumps; its crash dumps are handled by Windows Error Reporting instead.

Mitigates: Core dumps.
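Here is a minimal POSIX sketch with a hypothetical function name. It sets both the soft and the hard limit to zero, and an unprivileged process cannot raise the hard limit again later. According to the Linux core(5) manual page, RLIMIT_CORE is ignored when core dumps are piped to a program, which is one more reason to combine this with PR_SET_DUMPABLE.

```cpp
#include <sys/resource.h>

// Set both the soft and the hard RLIMIT_CORE to zero so no core file is
// written. Lowering the hard limit cannot be undone without privileges.
bool disable_core_dumps() {
    struct rlimit limit;
    limit.rlim_cur = 0;
    limit.rlim_max = 0;
    return setrlimit(RLIMIT_CORE, &limit) == 0;
}
```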

Case intrusion detection:

Mitigates: Bus sniffing, some cold boot and rootkit attacks.

Hypervisor detection: Red pill http://northsecuritylabs.blogspot.nl/2008/06/catching-blue-pill.html http://www.zdnet.com/blog/security/rutkowska-faces-100-undetectable-malware-challenge/334 http://www.invisiblethingslab.com/resources/bh07/IsGameOver.pdf

Mitigates: Hypervisors.

Anti-rootkits: Hooksafe

Mitigates: Rootkits.

Shielding: Tempest

Mitigates: EM/power/acoustic attacks. Requires special hardware.

Crypto hardware: Such as a Trusted Platform Module (TPM), a crypto token or an X.509 card. These are basically tiny computers that move the problem from the original computer to a tiny external computer designed from the ground up to be secure. Note that a TPM does not protect against cold boot attacks.

Mitigates: Depends on the quality of the hardware and the trustworthiness of the supplier. Requires special hardware.

Not using memory: Tresor, Frozen Cache and Loop-Amnesia avoid the problems of sensitive information in memory by not having it in memory at all. Instead, the information is stored in the processor’s registers or in the processor’s cache, which is then prevented from being flushed to memory. While interesting, this requires highly privileged instructions that need special operating system support. In virtual machines the instructions are likely emulated, and the host system will retain full access to the sensitive information. In practice, the register-based solution barely has room for one key, and the cache-based option effectively disables the L1 cache of a single physical CPU core, which severely hurts its performance. Also, hibernate and sleep modes wipe the processor’s registers and caches, so the sensitive information must be moved to another secure location before that happens. For servers, PrivateCore's vCage uses the cache technique to run a hypervisor in the processor’s cache. This hypervisor transparently encrypts and decrypts all memory accesses by the guest operating systems.

Mitigates: Hibernate files, swapping, core dumps, DMA access, cold boot attacks, and bus sniffing. Requires ring-0 instructions.

Practice at Coblue

At Coblue we developed a SecureByteArray class that implements many of the strategies presented above.

The SecureByteArray class only provides methods for construction, destruction and assignment. The size is fixed on allocation and can only be changed through assignment from another SecureByteArray. Semantically, it represents a piece of sensitive information stored in memory. It does not provide any API for accessing this information, not even a comparison operator. Instead, all access goes through the classes ReadAccess and ReadWriteAccess. These can only be stack allocated, and hence they force the developer to use the RAII pattern and to explicitly declare the kind of access he needs in a particular scope.
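The following hypothetical C++ sketch shows the pattern described above. It is not Coblue's implementation. The class names mirror the text, but assignment is left out and Linux/POSIX memory APIs are assumed. The storage is page-aligned, locked if possible, and inaccessible (PROT_NONE) whenever no accessor is open. ReadAccess and ReadWriteAccess grant read or read-write access for their scope only. To keep it simple, this sketch allows one accessor at a time.

```cpp
#include <cstddef>
#include <new>
#include <stdexcept>
#include <sys/mman.h>
#include <unistd.h>

class SecureByteArray {
public:
    explicit SecureByteArray(std::size_t size) : size_(size), len_(page_round(size)) {
        void* p = mmap(nullptr, len_, PROT_READ | PROT_WRITE,
                       MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
        if (p == MAP_FAILED) throw std::bad_alloc();
        data_ = static_cast<unsigned char*>(p);
        mlock(data_, len_);                // best effort: keep the pages out of swap
        mprotect(data_, len_, PROT_NONE);  // no access until an accessor asks for it
    }
    ~SecureByteArray() {
        mprotect(data_, len_, PROT_READ | PROT_WRITE);
        volatile unsigned char* vp = data_;
        for (std::size_t i = 0; i < len_; ++i) vp[i] = 0;  // clear before release
        munlock(data_, len_);
        munmap(data_, len_);
    }
    SecureByteArray(const SecureByteArray&) = delete;
    SecureByteArray& operator=(const SecureByteArray&) = delete;
    std::size_t size() const { return size_; }

private:
    friend class ReadAccess;
    friend class ReadWriteAccess;

    // Round up to whole pages; always map at least one page.
    static std::size_t page_round(std::size_t n) {
        const std::size_t page = static_cast<std::size_t>(sysconf(_SC_PAGESIZE));
        return n == 0 ? page : (n + page - 1) / page * page;
    }
    void open(int prot) const {
        if (open_) throw std::logic_error("only one accessor at a time");
        if (mprotect(data_, len_, prot) != 0) throw std::runtime_error("mprotect failed");
        open_ = true;
    }
    void close() const {
        mprotect(data_, len_, PROT_NONE);
        open_ = false;
    }

    std::size_t size_;
    std::size_t len_;
    unsigned char* data_ = nullptr;
    mutable bool open_ = false;
};

// Scoped read-only view; operator new is deleted so it can only live on the stack.
class ReadAccess {
public:
    explicit ReadAccess(const SecureByteArray& a) : a_(a) { a_.open(PROT_READ); }
    ~ReadAccess() { a_.close(); }
    ReadAccess(const ReadAccess&) = delete;
    ReadAccess& operator=(const ReadAccess&) = delete;
    void* operator new(std::size_t) = delete;
    void* operator new[](std::size_t) = delete;

    const unsigned char* data() const { return a_.data_; }
    std::size_t size() const { return a_.size_; }

private:
    const SecureByteArray& a_;
};

// Scoped read-write view with the same stack-only restriction.
class ReadWriteAccess {
public:
    explicit ReadWriteAccess(SecureByteArray& a) : a_(a) { a_.open(PROT_READ | PROT_WRITE); }
    ~ReadWriteAccess() { a_.close(); }
    ReadWriteAccess(const ReadWriteAccess&) = delete;
    ReadWriteAccess& operator=(const ReadWriteAccess&) = delete;
    void* operator new(std::size_t) = delete;
    void* operator new[](std::size_t) = delete;

    unsigned char* data() { return a_.data_; }
    std::size_t size() const { return a_.size_; }

private:
    SecureByteArray& a_;
};
```

Outside an accessor's scope, any stray read or write of the secret faults. Inside the scope, the type of the accessor documents whether the code is allowed to modify it.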

Internally the memory is laid out

TODO: Flush+Reload attack http://eprint.iacr.org/2013/448.pdf